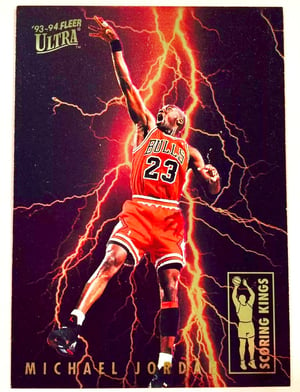

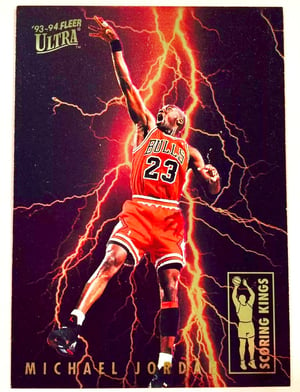

I’ve collected sports cards since I was 11 years old. Something about amassing and organizing a collection of cards that chronicled the bios and stats of my favorite sports stars always appealed to me. Early on, the hobby also taught me a bonus lesson in the basic law of supply and demand – generally speaking, the tougher a card is to pull from a pack of unopened cards, the higher its market value, and the most popular sports stars create the most demand, driving market value higher still. I still remember the exact moment in 1993, 25 years ago, when I pulled a Michael Jordan card that was rare at the time from a pack of basketball cards – the perfect combination of scarcity and star power! The card immediately vaulted to the top of my collection.

Our approach to the Cognitive Collector persona and components within our Cognitive Computing Platform suite is inspired by this real-life passion for collecting. In the world of the Kingland Platform, the Cognitive Collector is focused primarily on one thing: collecting as much useful and valuable unstructured content (data) as possible. The parallels with my sports card hobby are fun:

- Highly used, popular content (e.g., Securities and Exchange Commission public filings) is valuable because of the demand. It’s even more valuable if it is collected, organized, and processed for easy “downstream” consumption. Unlocking the data within popular content so it is easy to consume is critical to delivering a return on investment from the Collector and our Cognitive Computing suite, much like professionally grading a sports card helps it reach its highest potential market value.

- Rarer content has value as well, but that value is still typically driven by demand. Think back to my Michael Jordan card and imagine it had depicted the 12th man on the Chicago Bulls’ roster instead. A rare card of a less popular athlete would still have value to a collector trying to complete the entire set of those rare cards. Similarly, our Cognitive Collector aggregates a complete set of content, for example to corroborate company acquisition event information across multiple sources, or to ensure that all regulatory filing documentation is collected for a monitored entity.

- Both rare and abundant content can have value even if there isn’t strong demand initially. This is essentially the “all data has value” point of view, and it speaks directly to the concepts behind our Collector. For example, an industry trade journal article mentioning an event related to some obscure legal entity may not seem valuable today, but it could become valuable tomorrow if that entity becomes the counterparty in an important relationship or transaction. The sports card hobby has direct parallels: modern basketball superstars such as Steph Curry and Russell Westbrook took time to develop into stars, and demand for their rookie cards followed a similar growth pattern.

How Does the Collector Work?

We’ve cloud-optimized our Cognitive Collector through our partnership with AWS; architecturally, the Collector is built on a full data lake pattern using AWS technology. Amazon Simple Storage Service (S3) stores all content collected by the Collector and provides excellent resiliency, durability, accessibility, flexibility, and security for managing that content. Amazon Elasticsearch Service provides the indexing and search services needed to search the collected content in a scalable and secure manner. Amazon DynamoDB provides NoSQL persistence for all of the content’s metadata, including the tags and annotations output by our Cognitive Scholar’s processing, which we’ll review in future blog posts.
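To make the division of labor between the three services concrete, here is a minimal sketch of how one collected document might be split into an S3 object, a DynamoDB metadata item, and an Elasticsearch search document. The bucket name `collector-content`, the `raw/<source>/<id>.txt` key layout, the SHA-256 content ID, and all field names are hypothetical choices for illustration, not the Collector’s actual schema. The sketch only builds the payloads; it makes no AWS calls.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CollectedDocument:
    source: str   # channel the content came from, e.g. "rss" (hypothetical values)
    url: str      # where the content was collected
    title: str
    body: str     # raw unstructured text
    tags: list = field(default_factory=list)  # annotations added by later processing


def content_id(doc: CollectedDocument) -> str:
    # Content-addressed ID (assumption): identical bodies get the same ID,
    # so re-collected duplicates map to the same records.
    return hashlib.sha256(doc.body.encode("utf-8")).hexdigest()


def to_lake_records(doc: CollectedDocument, bucket: str = "collector-content") -> dict:
    cid = content_id(doc)
    s3_key = f"raw/{doc.source}/{cid}.txt"
    return {
        # Raw content for S3, the durable system of record in the data lake.
        "s3_object": {"Bucket": bucket, "Key": s3_key, "Body": doc.body.encode("utf-8")},
        # Metadata item for DynamoDB, keyed by content ID and pointing back to S3.
        "dynamodb_item": {
            "content_id": cid,
            "source": doc.source,
            "url": doc.url,
            "s3_uri": f"s3://{bucket}/{s3_key}",
            "collected_at": datetime.now(timezone.utc).isoformat(),
            "tags": doc.tags,
        },
        # Search document for an Elasticsearch index, sharing the same ID.
        "search_document": {"content_id": cid, "title": doc.title, "text": doc.body},
    }
```

With boto3, the first two payloads line up with `s3_client.put_object(**records["s3_object"])` and `table.put_item(Item=records["dynamodb_item"])`; the search document would be indexed into Elasticsearch under the same content ID. Sharing one ID across all three stores is what lets a search hit lead back to both the metadata and the raw content.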

The Collector also satisfies key architectural principles that we required to support content collection:

- Data Access Standardization: We emphasized building an appropriate set of REST API endpoints as a common layer for surfacing the content and data that the Collector stores in the data lake. Scalability is handled by an AWS Application Load Balancer in front of a Python Flask web application packaged in a Docker container.

- Collection Extensibility: Knowing that content may enter the data lake through a number of different channels, we built extensibility and overall flexibility into the Collector. Separate containerized modules within the Collector handle different channels of information, such as web crawling with StormCrawler, RSS feed processors, social media API processors, and custom content uploaders. The containerized approach also supports scalability.

- DevSecOps Excellence: DevSecOps adds an emphasis on security to DevOps, so we needed to ensure that our build-to-deployment automation pipeline was designed with security in mind. Each of the core AWS technologies used for our Collector and data lake (S3, Amazon Elasticsearch Service, DynamoDB) has security built in, and our Data Access Standardization enables stronger data access authorization. Additionally, our DevSecOps capability allows us to efficiently deploy private data lakes that the Collector can use for non-public content.
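The standardized REST layer and the authorization it enables can be sketched as a small Flask application. This is a minimal illustration, not the Collector’s actual API: the `/lakes/<lake>/content/<content_id>` route, the `X-Api-Key` header, the static key-to-lake entitlements, and the in-memory dictionaries standing in for DynamoDB metadata in a public and a private data lake are all hypothetical.

```python
from flask import Flask, abort, jsonify, request

# Hypothetical in-memory stand-ins for metadata held in two data lakes:
# a shared public lake and a private lake holding non-public content.
LAKES = {
    "public": {"doc-001": {"title": "Example 10-K filing", "source": "sec-filings"}},
    "private-acme": {"doc-900": {"title": "Internal memo", "source": "custom-upload"}},
}

# Hypothetical API keys mapped to the set of lakes each caller may read.
ENTITLEMENTS = {
    "key-analyst": {"public"},
    "key-acme": {"public", "private-acme"},
}

app = Flask(__name__)


def require_access(lake: str) -> None:
    """Abort with 403 unless the caller's API key is entitled to the lake."""
    api_key = request.headers.get("X-Api-Key", "")
    if lake not in ENTITLEMENTS.get(api_key, set()):
        abort(403)


@app.route("/lakes/<lake>/content/<content_id>", methods=["GET"])
def get_content(lake: str, content_id: str):
    # Authorize first, so callers cannot probe which private content exists.
    require_access(lake)
    record = LAKES.get(lake, {}).get(content_id)
    if record is None:
        abort(404)
    return jsonify({"lake": lake, "id": content_id, **record})


@app.route("/health", methods=["GET"])
def health():
    # A lightweight endpoint a load balancer health check could poll.
    return jsonify({"status": "ok"})
```

Because every read goes through one route, the authorization check lives in a single place instead of being repeated by each consumer. Inside a container, an app like this would typically be served by a production WSGI server such as gunicorn (`gunicorn app:app`) behind the load balancer, and a real deployment would replace the static keys with a proper identity provider.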
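Collection extensibility comes down to giving every channel module the same interface, so that adding a new channel does not touch the others. The sketch below shows that idea in Python with a small registry, an RSS processor built on the standard library’s XML parser, and a plain-text uploader. The `Channel` interface, the `register` decorator, the channel names, and the normalized document fields are hypothetical. A real web-crawling module such as StormCrawler (a Java project) would run in its own container rather than inside this Python code.

```python
import xml.etree.ElementTree as ET
from abc import ABC, abstractmethod
from typing import Iterator, List

# Hypothetical registry mapping a channel name to the class that handles it.
CHANNELS: dict = {}


def register(name: str):
    """Class decorator that adds a channel module to the registry."""
    def wrap(cls):
        CHANNELS[name] = cls
        return cls
    return wrap


class Channel(ABC):
    """Common interface that every collection channel implements."""

    @abstractmethod
    def collect(self, payload: str) -> Iterator[dict]:
        """Yield normalized documents with 'source', 'url', 'title', 'body' keys."""


@register("rss")
class RssChannel(Channel):
    def collect(self, payload: str) -> Iterator[dict]:
        root = ET.fromstring(payload)
        # Each <item> in an RSS feed becomes one normalized document.
        for item in root.iter("item"):
            yield {
                "source": "rss",
                "url": item.findtext("link", default=""),
                "title": item.findtext("title", default=""),
                "body": item.findtext("description", default=""),
            }


@register("upload")
class UploadChannel(Channel):
    def collect(self, payload: str) -> Iterator[dict]:
        # A custom uploader receiving one plain-text document; first line as title.
        title = payload.splitlines()[0] if payload else ""
        yield {"source": "upload", "url": "", "title": title, "body": payload}


def run_channel(name: str, payload: str) -> List[dict]:
    """Look up a channel by name and collect every document from the payload."""
    return list(CHANNELS[name]().collect(payload))
```

Every channel emits the same normalized shape, so downstream steps (storing, indexing, tagging) never need to know which channel a document came from; a social media processor would simply be one more registered class.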

To close with my customary pop culture reference, we’re back to the Marvel Universe and the character sharing the same name as our Collector component. With every Collector naturally comes a basic set of “scholarly” knowledge, as we see in Marvel’s Collector and his knowledge of his collection, and particularly with the Infinity Stones he’s pursuing. Join me next time as we dive deeper into the next component persona of our Text Analytics Platform – The Scholar!
