The extensive amount of documents filed daily around the world is something of a mystery. To help build some perspective, in 2018, the Securities & Exchange Commission (SEC) had 6,800 annual reports filed within the United States. In total, more than 650,000 documents were filed with the SEC last year, which include a wide variety of filing types and content. Now imagine expanding this across federal agencies in almost 100 additional countries, while also including press releases, news sources, corporate websites, and more. The amount of documents and articles becomes overwhelming.

Every company has specific business needs that are impacted by data attributes and information contained within unstructured text. Sometimes these are internal documents that a company has on hand, but otherwise the data is found externally, somewhere within the universe of filed documentation.

How does one identify which documents are relevant to their specific needs?

Filter the Noise

One solution requires filtering out the noise by identifying documents pertaining to a specific organization, person, or event (such as a corporate action or risk event) using simple keywords. Think of it as using the keyboard command of Ctrl+F to search a document for a specific term, but having the ability to execute this action across a vast amount of documents all at once. Take the 650,000 documents filed with the SEC in 2018. With properly tagged meta-data stored in a cloud-hosted data lake, these documents can be filtered down to a few hundred of interest in a matter of seconds. Most businesses are stuck in the perpetual rut of manual validation, but the slightest improvements in automation can significantly boost efficiencies. The automation of event or attribute detection is not conceptually complex, but implementing a simple filtering mechanism of terms can greatly reduce an expansive set of documents to a manageable size.

So what are the correct keywords and phrases to use?

Keywords and Phrases

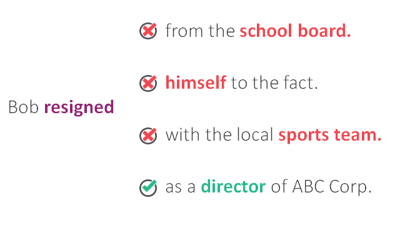

At times it may be straightforward, but event and attribute detection takes more configuration and expertise than one may think. For example, say a company wants to identify documents where a director or officer has changed, which would be considered the event. One can not simply search the phrase "director change," given the English language is much too intricate for literal matches. When a board member change occurs, it's usually referred to as an action like "resigned," "terminated," "retired," "appointed," or "elected."  This would require the keyword to reflect an action, while a second keyword would determine the type of individual being changed, such as a "director," "officer," or "member of the board." This combination of keywords and phrases "resigned → director," in close character proximity within the document, begins to start accurately classifying director and officer changes.

This would require the keyword to reflect an action, while a second keyword would determine the type of individual being changed, such as a "director," "officer," or "member of the board." This combination of keywords and phrases "resigned → director," in close character proximity within the document, begins to start accurately classifying director and officer changes.

Once an effective configuration of phrases is implemented, each document identified should pertain to the desired event or attribute. Our original 650,000 SEC documents can now be reduced to a small handful containing actionable information. This reduced scope will allow for a more focused effort, even if manual investigation is still required. No single automation effort will fully solve every inefficiency within a system, but the small impacts of multiple efforts will eventually form a functional ecosystem. This leads to a progressive state of event and attribute detection, which is connecting terms and attributes together to develop context.

Obtaining Context from Content

By coupling named-entity recognition (NER), which is simply identifying named entities like organizations and people, with event and attribute detection, a more sophisticated output begins to form. Imagine if NER identified the organization of "Amazon.com, Inc." and the individual "Jeff Bezos," while the event of "officer/director change" was found within the same document using the keywords "retired" and "CEO." The proximity of terms and the language of the sentence would need to be referenced, but the conclusion that "Jeff Bezos, the CEO of Amazon.com, Inc., has retired," could be formed based on the combination of named entities and events.

The power behind drawing accurate conclusions from documents is an extremely valuable skill. Text analytics software still struggles to develop this context accurately at large scale. Humans can develop this context quite easily, but cannot "consume" documents as quickly as a machine. Manual validation is not a sustainable model, which is why text analytics has moved to the forefront of document processing. The use of keywords, patterns, and trends defines the movement of processing large volumes of documents in an efficient manner, but it's the high-level meaning drawn from text that is key. As the business world continues to evolve, so will each of us, as we continue to try and stay ahead of the curve.

No Comments Yet

Let us know what you think