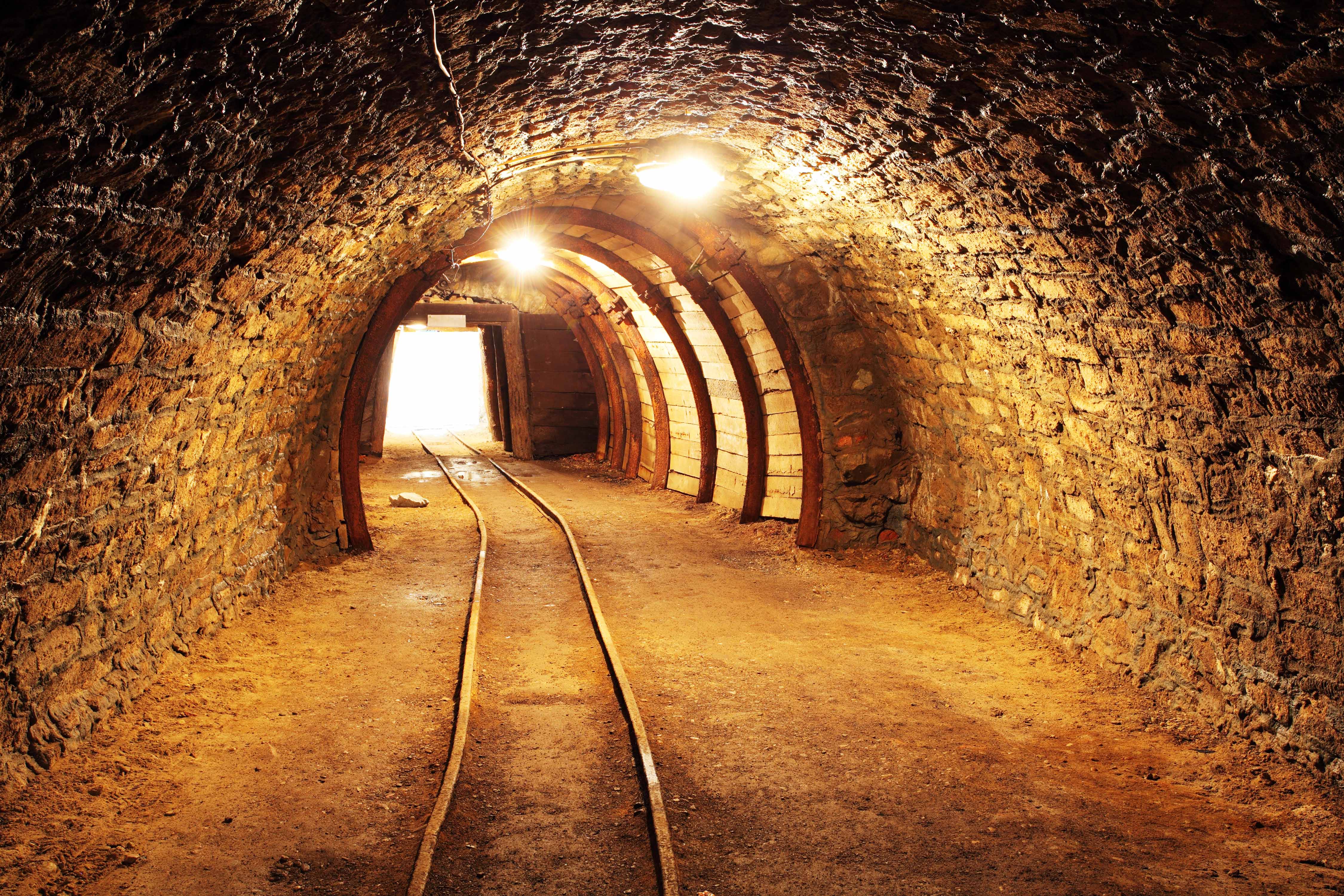

For years, regulatory and legal requirements have created hundreds, if not thousands, of processes that require companies to capture information in documents or records. This information includes details about their customers, suppliers, market activity, and many other facets of their business. I don't know a company on the planet that really values all of this documentation. However, in the era of Artificial Intelligence (AI) and Natural Language Processing (NLP), we're discovering that all of this documentation may be a goldmine of data for these large enterprises.

Record retention requirements are becoming the friend of data-driven organizations. Nearly every regulation provides guidance regarding record retention. Whether the records cover vendor contracts, legal, accounting, or human resources, or more industry-specific requirements related to Bank Secrecy, Anti-Money Laundering, or Know Your Customer, the average record retention requirement is five to seven years. This translates into millions of documents in most large enterprises. Record retention has effectively forced companies to house a large collection of information, much of it trapped inside documents.

This is where AI and NLP come in. Let's say you're working on an organization-wide data strategy. It's highly likely you will need data about legal entities (e.g., customers, partners, service providers, counterparties), people (e.g., employees, customers, vendors), and products (e.g., physical items, securities and other financial instruments, goods, services). How many data points could you obtain from all of the records retained for supplier agreements, transaction records, account authorizations, engagement letters, customer due diligence, and 50 other processes? The answer depends on how large the enterprise is, but I think you get the point: an enormous dataset lives within all of these documents.

So why is this dataset important? We've noticed leading organizations using AI and NLP to extract data from documents around three key themes:

Some people say AI technologies are a solution looking for a problem. When it comes to the years and years of documentation living in large organizations, I don't know if we necessarily have a problem, but we clearly have an opportunity. Can a $5 million investment in AI and NLP yield $10 million, $20 million, or $50 million in benefit? At Kingland, we think so.
