As more and more unstructured content is generated, the pressure to unlock that data increases with it. At Kingland, we leverage Natural Language Processing (NLP) to analyze large volumes of unstructured data through our Text Analytics capabilities. A fundamental (and possibly the most important) part of NLP is Language Modeling.

Language Modeling (LM) is the process of predicting the next word in a sequence of words (e.g., a sentence) based on its context within that sequence. Natural languages (e.g., English) are difficult to model because of their diverse terminology and vocabulary, and because usage changes over time. These challenges make it nearly impossible to build sustainable rule-based grammars and sentence structures to parse and understand language, which is why so many turn to "learning" language by using examples as training material. Some examples where LM is used include:

Traditional LM consisted of probabilistic models that use statistical distributions of n-grams to predict the next word in a sentence. An n-gram is a contiguous sequence of n words (e.g., "merger agreement" is a bigram), and the model estimates how likely each word is to follow the preceding n-1 words. More recent (and increasingly popular) neural network approaches have shown great promise in outperforming traditional methods because they can generalize beyond the exact sequences in the provided training material. They also combat the sparsity problem of n-grams (limited n-gram coverage, since many valid word sequences never appear in the training data) by using word vectors (i.e., numbers that represent the meaning of a word) as inputs. You can read more about this in Text Analysis: Building Neural Networks to Answer Questions about Complex Data.
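To make the n-gram idea concrete, here is a minimal sketch of a bigram (n = 2) model. It is a toy under stated assumptions, not Kingland's implementation: the three-sentence corpus is made up, tokens are split on whitespace, and probabilities are plain maximum-likelihood estimates with no smoothing. That last choice is why a word never seen in training yields no prediction at all, which is the sparsity problem in miniature.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark sentence boundaries so first/last words get a context.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, prev):
    """Maximum-likelihood estimate of P(next | prev) from bigram counts."""
    followers = counts.get(prev.lower())
    if not followers:
        return {}  # unseen context: no n-gram coverage, so no estimate
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()}

def predict_next(counts, prev):
    """Return the most probable next word, or None for an unseen context."""
    probs = next_word_probabilities(counts, prev)
    return max(probs, key=probs.get) if probs else None

# Hypothetical toy corpus for illustration only.
corpus = [
    "the merger agreement was signed",
    "the merger closed in march",
    "the company filed the agreement",
]
model = train_bigram_model(corpus)
print(predict_next(model, "the"))
print(next_word_probabilities(model, "merger"))
print(predict_next(model, "acquisition"))
```

Real n-gram models add smoothing or back-off so unseen contexts still receive some probability; neural models sidestep much of this by working from word vectors.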

It is worth noting that "perfect" language understanding would likely amount to artificial general intelligence. With this in mind, let's discuss how Kingland approaches LM for real-world use.

The real question is what preparation is required to train a model. As with most things in life, there's a price to be paid for success. Data collection, cleansing, and annotation are the lifeblood of training any model, and language modeling is no different. These are often the most time-consuming and least attractive parts of a data scientist's work, but they are the most important steps in training just about any model. Once your data is well structured and annotated, the actual training comes down largely to which platform and libraries you choose. That choice should be weighed carefully alongside your model management and deployment strategy and any other testing requirements you have.
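Annotation tools commonly export entity labels as character offsets, while many NER trainers expect one label per token. The sketch below converts between the two using the BIO scheme (B- begins an entity, I- continues it, O is outside any entity). The `(start, end, label)` span format with an exclusive end, the regex tokenizer, and the function name `spans_to_bio` are assumptions for illustration; rejecting spans that don't line up with token boundaries is one cheap cleansing check.

```python
import re

def spans_to_bio(text, spans):
    """Convert character-offset entity spans into per-token BIO labels.

    spans: list of (start, end, label) tuples, end exclusive (assumed format).
    """
    # Simple tokenizer: runs of word characters, or single punctuation marks.
    tokens = [(m.group(), m.start(), m.end()) for m in re.finditer(r"\w+|[^\w\s]", text)]
    starts = {s for _, s, _ in tokens}
    ends = {e for _, _, e in tokens}
    labels = ["O"] * len(tokens)
    for start, end, label in spans:
        # Cleansing check: a span must be non-empty and begin/end on token boundaries.
        if start >= end or start not in starts or end not in ends:
            raise ValueError(f"span {start}-{end} ({label}) is not token-aligned")
        inside = [i for i, (_, s, e) in enumerate(tokens) if s >= start and e <= end]
        labels[inside[0]] = f"B-{label}"
        for i in inside[1:]:
            labels[i] = f"I-{label}"
    return [tok for tok, _, _ in tokens], labels

text = "Tallgrass Equity, LLC entered into a merger."
entity = "Tallgrass Equity, LLC"
start = text.find(entity)
tokens, labels = spans_to_bio(text, [(start, start + len(entity), "ORG")])
for tok, lab in zip(tokens, labels):
    print(f"{tok:10} {lab}")
```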

For the average individual, reading a legal contract can be uncomfortable and possibly confusing. This is why we "have our lawyer" read or review these documents. Likewise, a 60-year-old male lawyer may feel the same way reading a fashion blog posted by a 24-year-old. This idea of publisher-specific vernacular also applies to machines when we train them to process and analyze language. To work within this paradigm, we train and develop language models from a publisher demographic/perspective. By allowing multiple models to co-exist, we embrace vernacular diversity and better understand written intent. This doesn't mean a custom model is required for everything: the average individual can read and understand quite a bit without major context switching, so a generic language model still has its place.

One could think of these publisher-based language models as an ensemble from which we call upon a particular dialect where and when necessary. This ensemble requires coordination, which is accomplished through unary (one-class) classification of the incoming content against each available language model. This tells us whether the incoming content "speaks" more like one demographic than another, which in turn lets us apply a better-fitting language model for Text Analytics.
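One way to picture the coordination step is sketched below. It is a hypothetical stand-in, not Kingland's classifier: each publisher model is reduced to an add-one-smoothed unigram model, content is scored by its average per-token log-probability (the negative log of perplexity), and each model makes its own one-class decision by comparing that score to a fixed threshold. Among the models that accept the content, the best-scoring one wins; if none accept, we fall back to the generic model. The model names, training snippets, and threshold of -3.0 are all made up.

```python
import math
from collections import Counter

class UnigramModel:
    """Add-one-smoothed unigram model standing in for a publisher-specific LM."""

    def __init__(self, name, texts):
        self.name = name
        self.counts = Counter(w for t in texts for w in t.lower().split())
        self.total = sum(self.counts.values())
        # +1 reserves probability mass for unseen words (a single UNK type).
        self.vocab_size = len(self.counts) + 1

    def avg_log_prob(self, text):
        """Average natural-log probability per token (higher = more familiar)."""
        words = text.lower().split()
        if not words:
            return float("-inf")
        return sum(
            math.log((self.counts[w] + 1) / (self.total + self.vocab_size))
            for w in words
        ) / len(words)

def route(text, models, threshold, fallback="generic"):
    """Each model accepts or rejects on its own (one-class); best acceptor wins."""
    scores = {m.name: m.avg_log_prob(text) for m in models}
    accepted = {name: s for name, s in scores.items() if s >= threshold}
    if not accepted:
        return fallback, scores
    return max(accepted, key=accepted.get), scores

# Hypothetical publisher "models" trained on tiny illustrative snippets.
legal = UnigramModel("legal", [
    "the party shall indemnify the other party",
    "this agreement shall be governed by delaware law",
])
fashion = UnigramModel("fashion", [
    "this season the boldest looks are oversized blazers",
    "pair the blazer with statement sneakers",
])
models = [legal, fashion]
print(route("the agreement shall be governed by delaware law", models, threshold=-3.0)[0])
print(route("quarterly earnings beat analyst expectations", models, threshold=-3.0)[0])
```

With this toy data the contract-style sentence routes to `legal`, while the financial-news sentence matches neither vocabulary and falls back to `generic`. In practice each publisher would have a full language model, and thresholds would be tuned on held-out content.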

Named Entity Recognition (NER) is an information extraction technique that identifies sub-strings of text as Named Entities and classifies them into pre-defined classes (e.g., organizations, people, locations). Let's take an example sentence that is quite a mouthful:

On March 26, 2018, Tallgrass Energy GP, LP, a Delaware limited partnership (“TEGP”), Tallgrass Equity, LLC, a Delaware limited liability company (“Tallgrass Equity”), Tallgrass Energy Partners, LP, a Delaware limited partnership (“TEP”), Razor Merger Sub, LLC, a Delaware limited liability company and wholly owned subsidiary of TEP (“Merger Sub”), and Tallgrass MLP GP, LLC, a Delaware limited liability company (“TEP GP”), entered into a definitive Agreement and Plan of Merger (“Merger Agreement”).

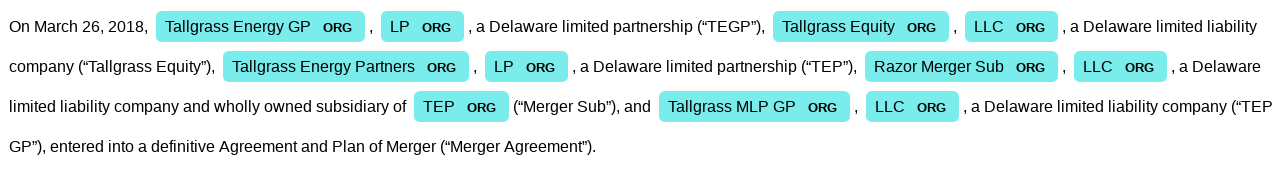

Below is an outline of how a generic language model, trained on news articles, performs when attempting to label ORGs (e.g., companies, agencies, institutions) for extraction:

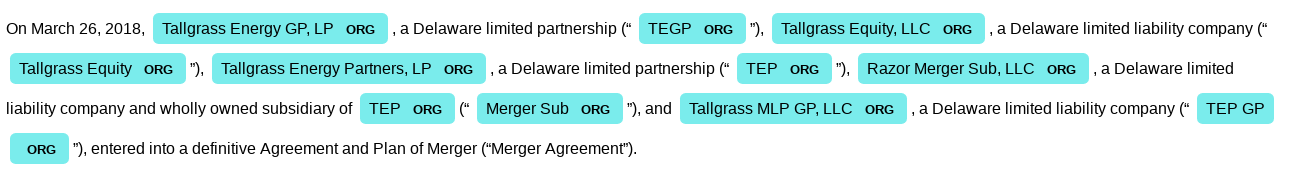

The generic model has its strengths and weaknesses, and its attempt at extracting Named Entities is admirable, but we can do even better. By applying a more targeted legalese language model, we can push the accuracy of Named Entity extraction from approximately 32% to greater than 95%.
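The post doesn't say exactly how the accuracy figures were computed, so the sketch below shows one common assumption: exact-match scoring of extracted ORG strings against a gold list, reported as precision, recall, and F1. The gold set is the five ORGs named in the example sentence; the predicted set is a made-up output for illustration (note the truncated `Tallgrass Energy GP` and the state name mistaken for an organization), not a result from either model.

```python
def score_entities(gold, predicted):
    """Exact-match scoring of extracted entities, compared as sets of strings."""
    gold, predicted = set(gold), set(predicted)
    true_pos = len(gold & predicted)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

# ORGs named in the example merger sentence.
gold = {
    "Tallgrass Energy GP, LP",
    "Tallgrass Equity, LLC",
    "Tallgrass Energy Partners, LP",
    "Razor Merger Sub, LLC",
    "Tallgrass MLP GP, LLC",
}
# Hypothetical model output for illustration only.
predicted = {
    "Tallgrass Energy GP",
    "Tallgrass Equity, LLC",
    "Tallgrass Energy Partners, LP",
    "Delaware",
}
print(score_entities(gold, predicted))
```

Production scorers usually compare character offsets and labels rather than raw strings, so the same name appearing at two positions is counted separately.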

Within Kingland's Text Analytics capabilities, we leverage language model training to improve Named Entity extraction, and we've found that a properly trained language model can drastically increase extraction accuracy, as shown in the simple examples above. Stay tuned as we continue to invest in additional Text Analytics capabilities over the coming months!
